pyOptSparse

Introduction

Although we specialize in optimization, we don’t write our own optimization algorithms. We like to work a little closer to the applications side, and we figure that the mathematicians have already done an exceptional job developing the algorithms. In fact, there are many great optimization algorithms out there, each with different advantages. From the user’s perspective most of these algorithms have similar inputs and outputs, so we decided to develop a library for optimization algorithms with a common interface: pyOptSparse. pyOptSparse allows the user to switch between several algorithms by changing a single argument. This facilitates comparison between different techniques and allows the user to focus on the other aspects of building an optimization problem.

In this section, we will go over the basic pyOptSparse optimization script and try our hand at the familiar Rosenbrock problem.

Files

Navigate to the directory opt/pyoptsparse in your tutorial folder.

Create the following empty runscript in the current directory:

rosenbrock.py

Optimization Problem Definition

We will be solving a constrained Rosenbrock problem defined in the following manner:

Dissecting the pyOptSparse runscript

Open the file rosenbrock.py in your favorite text editor.

Then copy the code from each of the following sections into this file.

Import libraries

from pyoptsparse import OPT, Optimization, History

import numpy as np

import argparse

import matplotlib.pyplot as plt

First we import everything from pyOptSparse.

Additionally we import argparse to enable the use of command line arguments.

Command line arguments

parser = argparse.ArgumentParser()

parser.add_argument("--opt", type=str, default="slsqp")

args = parser.parse_args()

We often set up scripts with the command line arguments to allow us to make subtle changes to the optimization without having to modify the script. In this case we set up a single command line argument to choose the optimizer that we will use to solve the optimization problem.

Define the callback function

def userfunc(xdict):

x = xdict["xvars"] # Extract array

funcs = {}

funcs["obj"] = 100 * (x[1] - x[0] ** 2) ** 2 + (1 - x[0]) ** 2

funcs["con"] = 0.1 - (x[0] - 1) ** 3 - (x[1] - 1)

return funcs

For any optimization problem, pyOptSparse needs a way to query the functions of interest at a given point in the design space.

This is done with callback functions.

The callback function receives a dictionary with the design variables and returns a dictionary with the computed functions of interest.

The names of the design variables (xvars) and functions of interest (obj and con) are user-defined, as you will see in the next few steps.

Define the sensitivity function

def userfuncsens(xdict, funcs):

x = xdict["xvars"] # Extract array

funcsSens = {}

funcsSens["obj"] = {

"xvars": [2 * 100 * (x[1] - x[0] ** 2) * (-2 * x[0]) - 2 * (1 - x[0]), 2 * 100 * (x[1] - x[0] ** 2)]

}

funcsSens["con"] = {"xvars": [-3 * (x[0] - 1) ** 2, -1]}

return funcsSens

The user-defined sensitivity function allows the user to provide efficiently computed derivatives to the optimizer.

In the absence of user-provided derivatives, pyOptSparse can also compute finite difference derivatives, but we generally avoid that option.

The sensitivity function receives both the dictionary of design variables (xdict) and the previously computed dictionary of functions of interest (funcs).

The function returns a dictionary with derivatives of each of the functions in funcs with respect to each of the design variables in xdict.

For a vector variable (like xvars), the sensitivity is provided as a Jacobian.

Instantiate the optimization problem

optProb = Optimization("Rosenbrock function", userfunc)

The Optimization class holds all of the information about the optimization problem. We create an instance of this class by providing the name of the problem and the callback function.

Indicate the objective function

optProb.addObj("obj")

Although we have already set the callback function for the optimization problem, the optimizer does not know which function of interest it should be minimizing.

Here we tell the optimizer the name of the objective function.

This should correspond with one of the keys in the funcs dictionary that is returned by the callback function.

Add design variables

optProb.addVarGroup(name="xvars", nVars=2, varType="c", value=[3, -3], lower=-5.12, upper=5.12, scale=1.0)

Now we need to add the design variables to the problem, which is done with a call to addVarGroup().

Add constraints

optProb.addCon("con", upper=0, scale=1.0)

We complete the optimization problem set-up by adding the constraints, using the function addCon().

Set up the optimizer

We now have a fully defined optimization problem, but we haven’t said anything about choosing an optimizer. The optimizer is set up with the following lines of code. The options dictionary can be modified to fine-tune the optimizer, but if it is left empty, default values will be used.

optOptions = {}

opt = OPT(args.opt, options=optOptions)

Solve the problem

Finally, we can solve the problem.

We give the optimizer the optimization problem, the sensitivity function, and optionally, a location to save an optimization history file.

The sens keyword also accepts 'FD' to indicate that the user wants to use finite difference for derivative computations.

sol = opt(optProb, sens=userfuncsens, storeHistory="opt.hst")

print(sol)

Run it yourself!

Try running the optimization.

python rosenbrock.py

Terminal output

Optimization Problem -- Rosenbrock function

================================================================================

Objective Function: userfunc

Solution:

--------------------------------------------------------------------------------

Total Time: 0.0516

User Objective Time : 0.0002

User Sensitivity Time : 0.0002

Interface Time : 0.0476

Opt Solver Time: 0.0035

Calls to Objective Function : 31

Calls to Sens Function : 26

Objectives

Index Name Value

0 obj 2.371563E-03

Variables (c - continuous, i - integer, d - discrete)

Index Name Type Lower Bound Value Upper Bound Status

0 xvars_0 c -5.120000E+00 1.048645E+00 5.120000E+00

1 xvars_1 c -5.120000E+00 1.099885E+00 5.120000E+00

Constraints (i - inequality, e - equality)

Index Name Type Lower Value Upper Status Lagrange Multiplier (N/A)

0 con i -1.000000E+20 -5.669922E-08 0.000000E+00 u 9.00000E+100

--------------------------------------------------------------------------------

The optimizer (SLSQP) also writes out an output file SLSQP.out that contains additional information.

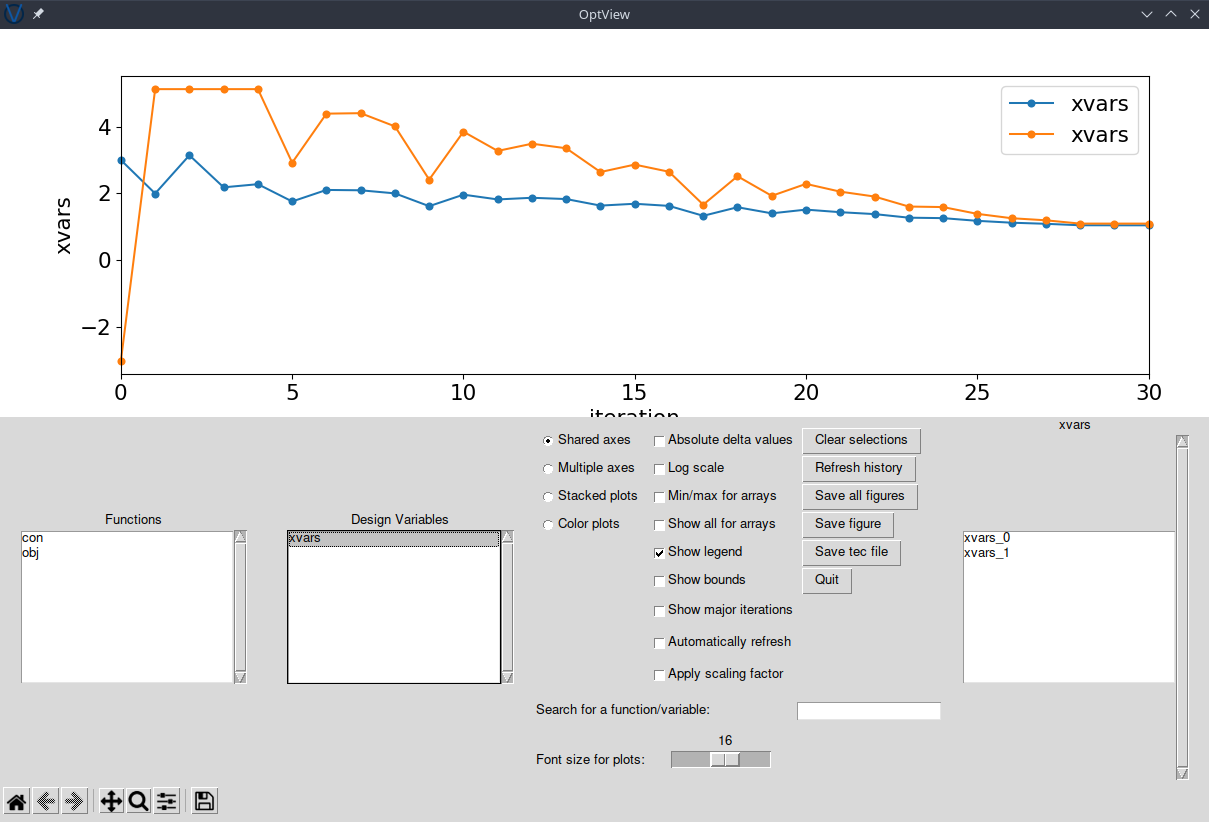

Visualization with OptView

pyOptSparse comes with a simple GUI to view the optimization history called OptView. You can run it with the command

optview opt.hst

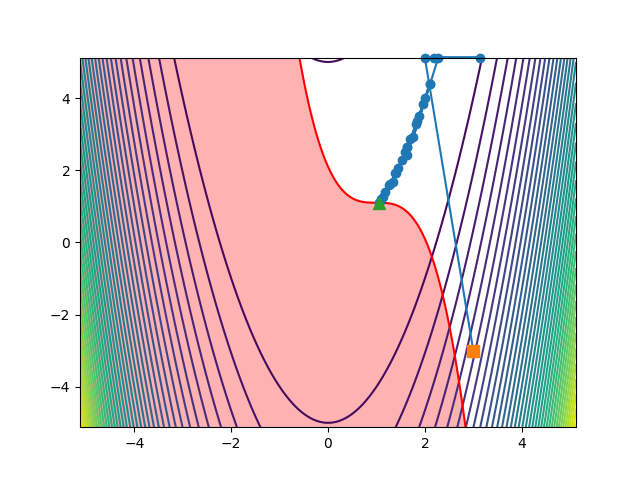

You can also load the history file in python to make your own plots, such as the one below, showing the path the optimiser took to the solution:

# Load the history file

optHist = History("opt.hst")

values = optHist.getValues()

# Plot contours of the objective and the constraint boundary

x = np.linspace(-5.12, 5.12, 201)

X, Y = np.meshgrid(x, x)

objFunc = 100 * (Y - X**2) ** 2 + (1 - X) ** 2

conFunc = 0.1 - (X - 1) ** 3 - (Y - 1)

fig, ax = plt.subplots()

ax.contour(X, Y, objFunc, levels=40)

ax.contour(X, Y, conFunc, levels=[0.0], colors="r")

ax.contourf(X, Y, conFunc, levels=[0.0, np.inf], colors="r", alpha=0.3)

# Plot the path of the optimizer

ax.plot(values["xvars"][:, 0], values["xvars"][:, 1], "-o", markersize=6, clip_on=False)

ax.plot(values["xvars"][0, 0], values["xvars"][0, 1], "s", markersize=8, clip_on=False)

ax.plot(values["xvars"][-1, 0], values["xvars"][-1, 1], "^", markersize=8, clip_on=False)

fig.savefig("OptimizerPath.png")